The Challenge

Over the past two decades, machine vision algorithms have become quite sophisticated, yet they still struggle when they do not know exactly what they are looking for. This presents several challenges:

- identifying the unknown object, whether it is a grappling feature on a satellite in space, an anomalous feature on the underside of a ship, or a target in satellite imagery.

- finding that unknown object in particularly poor lighting, caused either by the highly specular lighting in space or by the dark, sediment-rich undersea environment; machine vision algorithms are significantly error-prone in both settings.

- overcoming substantial communication latencies in human/machine teams, such as when an operator is working with a satellite’s robotic arm or an undersea vehicle.

Project Details

Target Detection

Our Approach

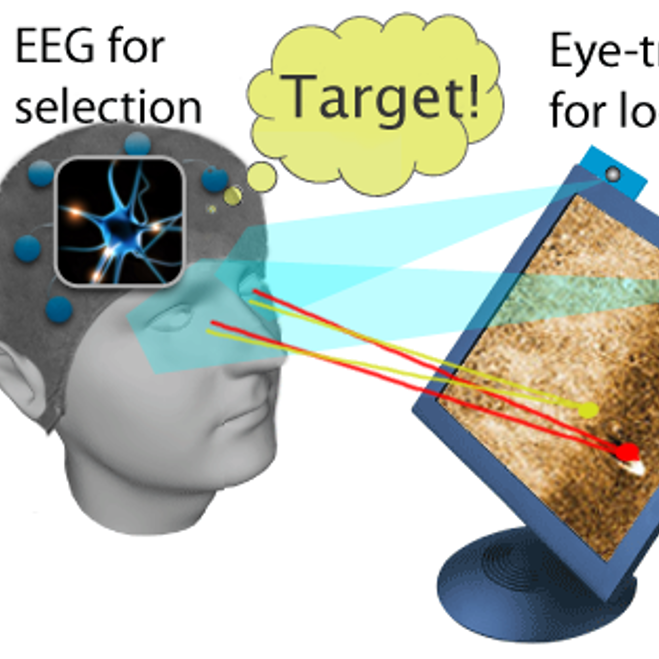

By combining eye tracking with a brain-based selection indicator, the system can extract the human’s intended point of action from the scene, capturing the sophisticated human ability to identify points of interest in complex scenes at the speed of thought. This project involves developing and evaluating this eye-brain interface (EBI) system. It uses commercial off-the-shelf eye tracking (mapping gaze location and duration) and EEG systems, combined with custom algorithms that detect selection triggers in the brain-based signals and fuse all sensor outputs in real time.
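
The fusion step can be illustrated with a minimal sketch, assuming both streams share a clock and the EEG has already been reduced to a single feature per sample. The rising-edge threshold detector is a simple stand-in, not the project’s custom trigger algorithm, and `GazeSample`, `detect_triggers`, `fixation_point`, `select_points`, the 0.3 s look-back window, and the 50-pixel dispersion limit are all hypothetical choices for illustration.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GazeSample:
    t: float  # timestamp in seconds (assumed to share a clock with the EEG stream)
    x: float  # gaze x coordinate in scene pixels
    y: float  # gaze y coordinate in scene pixels


def detect_triggers(eeg_t: List[float], eeg_v: List[float],
                    threshold: float, refractory: float = 0.5) -> List[float]:
    """Stand-in trigger detector: rising edges of a preprocessed EEG feature.

    Fires when the feature crosses `threshold` from below, then ignores further
    crossings for `refractory` seconds so one selection yields one trigger.
    """
    triggers: List[float] = []
    last = float("-inf")
    prev_above = False
    for t, v in zip(eeg_t, eeg_v):
        above = v >= threshold
        if above and not prev_above and t - last >= refractory:
            triggers.append(t)
            last = t
        prev_above = above
    return triggers


def fixation_point(gaze: List[GazeSample], t_trigger: float, window: float = 0.3,
                   max_dispersion: float = 50.0) -> Optional[Tuple[float, float]]:
    """Mean gaze position over the `window` seconds up to the trigger.

    Looks backward because the brain-based trigger is assumed to lag the
    fixation it confirms. Returns None if there are no samples or if the gaze
    was moving (x-range + y-range exceeds `max_dispersion` pixels).
    """
    times = [g.t for g in gaze]  # gaze must be sorted by timestamp
    pts = gaze[bisect_left(times, t_trigger - window):bisect_right(times, t_trigger)]
    if not pts:
        return None
    xs = [p.x for p in pts]
    ys = [p.y for p in pts]
    if (max(xs) - min(xs)) + (max(ys) - min(ys)) > max_dispersion:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


def select_points(gaze: List[GazeSample], eeg_t: List[float], eeg_v: List[float],
                  threshold: float) -> List[Tuple[float, float, float]]:
    """Fuse both streams into (trigger_time, x, y) points of action."""
    selections = []
    for t in detect_triggers(eeg_t, eeg_v, threshold):
        point = fixation_point(gaze, t)
        if point is not None:
            selections.append((t, point[0], point[1]))
    return selections


# Example: gaze jumps from (100, 50) to (300, 80) at t = 1.0 s; EEG feature spikes at t = 1.5 s.
times = [i / 10 for i in range(21)]
gaze = [GazeSample(t, 100.0, 50.0) if t < 1.0 else GazeSample(t, 300.0, 80.0) for t in times]
eeg = [5.0 if 1.5 <= t < 1.7 else 0.0 for t in times]
result = select_points(gaze, times, eeg, threshold=1.0)
print(result)  # [(1.5, 300.0, 80.0)]
```

The dispersion check rejects triggers that arrive while the eyes are still moving, so a selection is only reported when gaze has settled on one location.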

Such a system will allow a human-robot team to accomplish tasks that require no overt action by the human, with response times and cognitive workload that rival current state-of-the-art interfaces such as eye-mouse and eye-keyboard systems. The system can also generate data for training machine vision algorithms to mimic these human capabilities autonomously.
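
How that training data would be packaged is not specified. One common option, shown here as an assumption rather than the project’s format, is to store each confirmed selection as a point annotation and expand it into a Gaussian heatmap target of the kind keypoint-detection networks are often trained on. `PointLabel`, `to_jsonl`, `gaussian_heatmap`, the `frame_0001` identifier, and the `sigma` default are hypothetical.

```python
import json
import math
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class PointLabel:
    image_id: str  # hypothetical identifier of the frame the operator was viewing
    x: float       # selected column, in image pixels
    y: float       # selected row, in image pixels


def to_jsonl(labels: List[PointLabel]) -> str:
    """Serialize confirmed selections as JSON Lines, one record per selection."""
    return "\n".join(json.dumps(asdict(label), sort_keys=True) for label in labels)


def gaussian_heatmap(width: int, height: int, x: float, y: float,
                     sigma: float = 2.0) -> List[List[float]]:
    """Dense height x width training target that peaks at 1.0 on the selected point."""
    two_sigma_sq = 2.0 * sigma * sigma
    return [[math.exp(-((col - x) ** 2 + (row - y) ** 2) / two_sigma_sq)
             for col in range(width)]
            for row in range(height)]


labels = [PointLabel("frame_0001", 3.0, 2.0)]
record = to_jsonl(labels)  # '{"image_id": "frame_0001", "x": 3.0, "y": 2.0}'
target = gaussian_heatmap(8, 6, labels[0].x, labels[0].y)  # 6 rows x 8 columns
```

Keeping the raw point records separate from the heatmaps lets the same selections be re-rendered with a different `sigma` or image size without re-running the operator sessions.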